This past fall, I had the opportunity to intern on the relatively new Bulk I/O team at Cockroach Labs. Our team’s focus is to improve bulk data operations on CockroachDB. My work involved creating a better experience when importing or backing up data without using an external storage service like Amazon’s S3 or Azure’s Blob Service. CockroachDB supports interacting with files in a node’s local storage system, through a feature called nodelocal. However, this has caused confusion and misuse due to its implementation, and the project I took on involved refining the user experience around nodelocal.

How CockroachDB handles external storage

To understand my project, it’s important to first gain some background knowledge about how CockroachDB interacts with external storage systems. Bulk data operations, such as IMPORT, BACKUP, or RESTORE often need to interact with external storage systems.

Let’s take IMPORT as an example. If you want to import data into your cluster, we provide a few options for you to choose from. The first and the recommended option is to use a cloud provider’s storage system. Your import statement would look something like:

The second option we provide is a similar mechanism to read the file from an HTTP server, which you could serve locally, off your computer:

A third option, is to use what we call nodelocal: reading and writing files in a directory on node(s)’s local file system. That statement would look as follows:

At first glance, this appears to be the simplest method, as it does not depend on access to a cloud provider’s storage system or a separate file server, and users often want to use this to import their data into a cluster. The idea is that you could drop your import file(s) onto a node’s local file system, and the import statement will go and import it. However, in practice, prior to my changes that are being released in CockroachDB 20.1, it wasn’t always quite that simple.

Prior problems with `nodelocal` storage

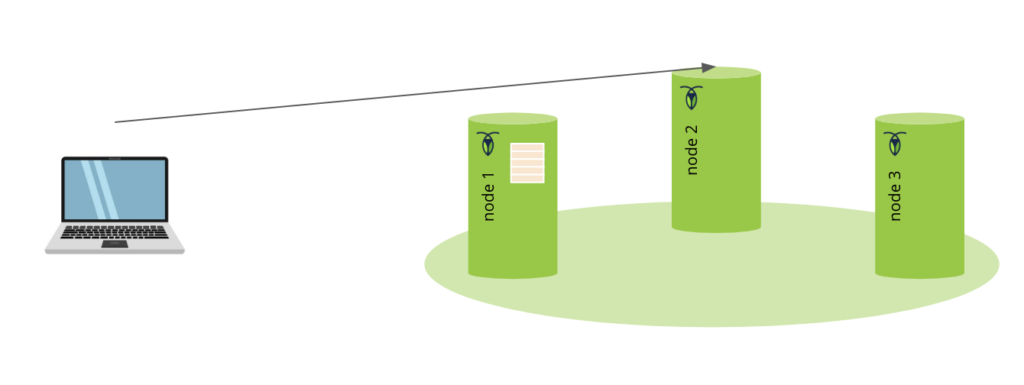

There are a few subtleties worth noting about nodelocal, many of which were sources of confusion for CockroachDB users. First, the nodelocal URL, nodelocal:///path/file.csv, specifies the path to a file, but not the node on which that file is located. CockroachDB is designed as a distributed, multi-node system and IMPORT takes advantage of that by using many nodes to IMPORT data. In older versions of CockroachDB, if a user places the import files on node1, but node2 ends up being assigned to run part of the import progress, it would fail because node2 would be unable to find the file when it looked in its local filesystem.

This behavior was difficult to clarify to users and was often a source of confusion when using nodelocal, particularly since it would “just work” when testing or developing on a single-node cluster but then break in confusing ways when moving into multi-node production deployments.

A similar issue affected BACKUPs to nodelocal, which would also succeed but could not be RESTORE’d. In BACKUP, each node writes its own portion of the data to backup files, which, when using nodelocal, went to each node’s own local file systems.

However, those backups were not restorable, since the backup was completely scattered in fragments across the local filesystems of nodes in the cluster. This too, was a source of confusion to users as they could not restore a successful backup.

Making `nodelocal` work from anywhere in the cluster

The main problem in both cases above is that nodelocal on node1 is not the same as nodelocal on node2 (unless it happens to be configured to point to nfs or something). In order to improve the user experience in the above cases, we planned a two part project. The first and largest part of the project was to create an internode file exchanging service, which could share large files between nodes. We will now support specifying a nodelocal URL as follows:

where 2 is the nodeID of the node whose file system contains the intended import file. In order to support this, the node that needs to read that file would fetch the file from the node where it is stored, and then import it.

This service, which we named the BlobService, also supports writing files and thus allows making restorable backups when running the following:

In the above example, we write the full backup in parallel on every node, each using the BlobService to write directly to the destination path on node1. We can then restore the backup the same way import works, with each node in the RESTORE fetching directly from node1’s file system.

In order to integrate the BlobService into the existing infrastructure around external storage interactions in features like IMPORT and BACKUP, we needed to to implement the ExternalStorage interface, which is implemented for each external storage system we support including GCP, AWS, Azure, HTTP, and of course, nodelocal. This means our BlobService needs to be used by the nodelocal implementation of ExternalStorage which has the following interface:

We decided that we can create a gRPC BlobService running on every node in the cluster. We then created a BlobClient which is able to dial any other node’s BlobService and read files. The BlobClient supports the exact same interface as ExternalStorage, making it easily integratable into our existing logic. These changes, along with the URL changes to add a node ID, fixed the behavior of nodelocal to be much closer to user expectations.

The Last Mile: uploading files to nodelocal

The second part of my project involved making uploading files to nodelocal easier. All of the above improvements significantly improve the experience of using nodelocal, however when we looked at the user-journeys around IMPORT use cases, most users were usually starting with files on their laptop or workstation and were still sometimes getting stuck just getting those files somewhere where their nodes could IMPORT them. In some cases, a user might have a SQL connection through a load-balancer to their cluster, but didn’t have direct filesystem access to write to the nodelocal IO directory. Or even if they did, figuring out where that was and putting files in the right place was a recurring source of confusion. We wanted to mitigate this by allowing users to seamlessly upload files to CockroachDB through the same SQL connection they already have in their SQL shell.

To solve this problem, we leveraged the COPY statement, which uses a special protocol to transport bulk data over to the database. We made some slight changes to how COPY statements are processed to write the data that was sent through to a file in a location specified by the user rather than the usual process that inserts it as rows into a table. This was then wrapped in a command in our Cockroach CLI, such that users can now run the following:

After the addition of this feature, users who want to IMPORT a file on their local machine an entirely new workflow available to them that does not depend on using any external storage services or file servers. They can now upload their import file right from their laptop using the Cockroach CLI and their existing SQL connection string, and then IMPORT that file using nodelocal URI. Hopefully this will make new user’s onboard faster onto CockroachDB, and improve the developer experience of using nodelocal.

Working on Cockroach Labs’s Bulk I/O Team

Hope you enjoyed reading about my project. Working on the Bulk I/O team at Cockroach Labs over the past 4 months has been really rewarding. My project involved cross team collaboration because much of the work span across all the layers of Cockroach’s infrastructure. That is, from the SQL team which maintains the pgwire protocol and in particular its COPY sub-protocol, to the KV and replication layer which maintains and tunes many of our higher throughput streaming gRPC services. It was a great team to get an overview of the different layers of a database system, and how that could work in the world of distributed systems. A huge thanks to my teammates David and Lucy for overseeing my project and guiding me through my internship. Thanks to everyone at Cockroach Labs for an amazing 4 months!